Definition

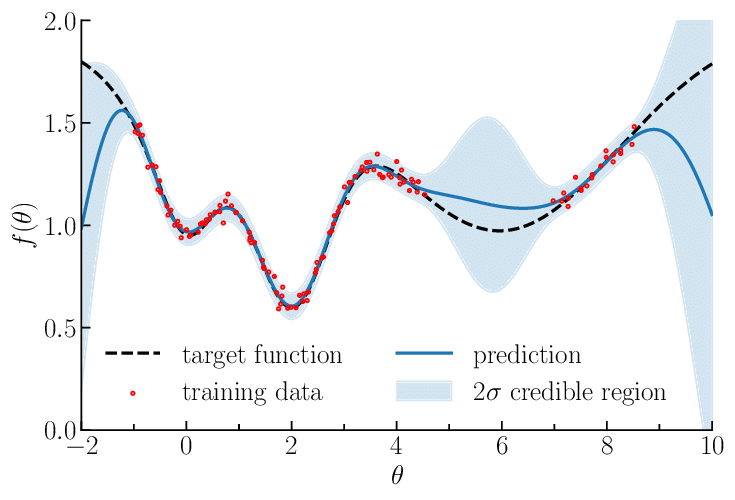

Gaussian Process Regression (GPR) is a non-parametric Bayesian approach to regression that provides uncertainty estimates in addition to point estimates. GPR assumes that the values of the function at any finite set of input points are jointly Gaussian distributed.

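Consider a training dataset $\mathcal{D} = \{(\mathbf{x}_i, y_i)\}_{i=1}^{n}$ with inputs $X$ and targets $\mathbf{y}$, and $m$ test points $X_*$ (assume the $y_i$'s have zero mean). The task of GPR is to provide a predictive distribution over the function values at the test points.

The model assumes that the observed target values $y_i$ are noisy versions of the underlying true function $f$:

$$y_i = f(\mathbf{x}_i) + \varepsilon_i$$

where $\varepsilon_i \sim \mathcal{N}(0, \sigma_n^2)$ is Gaussian noise with variance $\sigma_n^2$.

By the Gaussian process assumption, the observations $\mathbf{y}$ and the true function outputs $\mathbf{f}_*$ at the given test points are jointly distributed as an $(n+m)$-dimensional multivariate normal distribution specified by the kernel function $k$:

$$\begin{bmatrix} \mathbf{y} \\ \mathbf{f}_* \end{bmatrix} \sim \mathcal{N}\left(\mathbf{0},\ \begin{bmatrix} K(X, X) + \sigma_n^2 I & K(X, X_*) \\ K(X_*, X) & K(X_*, X_*) \end{bmatrix}\right)$$

Where:

- $K(A, B)$ is the covariance matrix of all pairwise similarities $k(\mathbf{a}, \mathbf{b})$ for $\mathbf{a} \in A$ and $\mathbf{b} \in B$, where $k$ is a kernel function.

The posterior distribution over $\mathbf{f}_*$ comes from conditioning the joint distribution on the observations:

$$\mathbf{f}_* \mid X, \mathbf{y}, X_* \sim \mathcal{N}(\boldsymbol{\mu}_*, \Sigma_*)$$

Where:

$$\boldsymbol{\mu}_* = K(X_*, X)\left[K(X, X) + \sigma_n^2 I\right]^{-1}\mathbf{y}$$

$$\Sigma_* = K(X_*, X_*) - K(X_*, X)\left[K(X, X) + \sigma_n^2 I\right]^{-1}K(X, X_*)$$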

The predictive mean gives the expected function values at the test points, and the predictive covariance matrix quantifies the uncertainty in the predictions.
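As an illustration, the conditioning step can be sketched in NumPy. This is a minimal sketch, not a reference implementation: it assumes a zero-mean prior, an RBF kernel, and the standard conditioning formulas, mean = K_s^T (K + noise_var I)^{-1} y and cov = K_ss - K_s^T (K + noise_var I)^{-1} K_s. The names `rbf_kernel`, `gp_posterior`, `noise_var`, and `length_scale` are chosen here for illustration only.

```python
import numpy as np

def rbf_kernel(A, B, length_scale=1.0):
    """Squared-exponential kernel matrix between the rows of A and the rows of B."""
    sq_dists = (
        np.sum(A**2, axis=1)[:, None]
        + np.sum(B**2, axis=1)[None, :]
        - 2.0 * A @ B.T
    )
    # Clip tiny negative values caused by floating-point round-off.
    return np.exp(-0.5 * np.maximum(sq_dists, 0.0) / length_scale**2)

def gp_posterior(X_train, y_train, X_test, noise_var=1e-2, length_scale=1.0):
    """Posterior mean and covariance of f at X_test for a zero-mean GP."""
    K = rbf_kernel(X_train, X_train, length_scale)    # train/train covariance
    K_s = rbf_kernel(X_train, X_test, length_scale)   # train/test covariance
    K_ss = rbf_kernel(X_test, X_test, length_scale)   # test/test covariance

    # Cholesky factor of the noisy training covariance: L @ L.T = K + noise_var * I.
    L = np.linalg.cholesky(K + noise_var * np.eye(len(X_train)))
    # alpha = (K + noise_var * I)^{-1} y, obtained with two solves instead of an explicit inverse.
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    cov = K_ss - v.T @ v
    return mean, cov

# Toy data (assumed for illustration): noisy samples of sin(x), centred to zero mean.
rng = np.random.default_rng(0)
X_train = np.linspace(0.0, 5.0, 8)[:, None]
y_train = np.sin(X_train).ravel() + 0.1 * rng.standard_normal(8)
y_train = y_train - y_train.mean()

# One test point inside the training range, one far outside it.
X_test = np.array([[2.5], [20.0]])
mean, cov = gp_posterior(X_train, y_train, X_test)
std = np.sqrt(np.diag(cov))
```

The far-away test point should revert to the prior (mean near zero, standard deviation near one), while the point inside the training range gets a much tighter predictive distribution. `np.linalg.solve` is a general solver; a triangular solver such as SciPy's `solve_triangular` would exploit the structure of `L` more efficiently.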

The computational complexity of GPR is $O(n^3)$ in the number of training points $n$, due to the matrix inversion required for calculating the posterior, making it less suitable for large datasets.

Kernel Function

The kernel function $k(\mathbf{x}, \mathbf{x}')$ measures the similarity or correlation between two inputs $\mathbf{x}$ and $\mathbf{x}'$. The choice of the kernel function is crucial as it encodes prior assumptions about the properties of the function, such as smoothness and periodicity.

Examples

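- RBF kernel: $k(\mathbf{x}, \mathbf{x}') = \exp\left(-\frac{\|\mathbf{x} - \mathbf{x}'\|^2}{2\ell^2}\right)$, with length scale $\ell$. It assumes that nearby points are highly correlated, resulting in smooth function estimates.

- Periodic (Exp-Sine-squared) kernel: $k(\mathbf{x}, \mathbf{x}') = \exp\left(-\frac{2\sin^2\left(\pi \|\mathbf{x} - \mathbf{x}'\| / p\right)}{\ell^2}\right)$, with period $p$ and length scale $\ell$. It models functions that repeat with a fixed period.

- Linear (Dot-product) kernel: $k(\mathbf{x}, \mathbf{x}') = \sigma_0^2 + \mathbf{x}^\top \mathbf{x}'$. It models linear relationships between inputs and outputs.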
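The three kernels can be written out directly. The sketch below assumes the common parameterizations (the ones scikit-learn uses for its `RBF`, `ExpSineSquared`, and `DotProduct` kernels): exp(-d^2 / (2 l^2)) for RBF, exp(-2 sin^2(pi d / p) / l^2) for the periodic kernel, and sigma_0^2 + x . y for the linear kernel, where d is the Euclidean distance. The function and parameter names are illustrative.

```python
import numpy as np

def rbf(x, y, length_scale=1.0):
    """RBF kernel: similarity decays smoothly with squared distance."""
    d2 = np.sum((x - y) ** 2)
    return np.exp(-0.5 * d2 / length_scale**2)

def periodic(x, y, length_scale=1.0, period=1.0):
    """Exp-Sine-squared kernel: inputs one full period apart are maximally similar."""
    d = np.sqrt(np.sum((x - y) ** 2))
    return np.exp(-2.0 * np.sin(np.pi * d / period) ** 2 / length_scale**2)

def linear(x, y, sigma_0=0.0):
    """Dot-product kernel: sigma_0 controls the inhomogeneous offset."""
    return sigma_0**2 + np.dot(x, y)

a = np.array([0.0])
near = np.array([0.1])
far = np.array([3.0])

k_near = rbf(a, near)   # close to 1: nearby inputs are strongly correlated
k_far = rbf(a, far)     # close to 0: distant inputs are nearly uncorrelated
k_one_period = periodic(a, np.array([2.0]), period=2.0)  # inputs exactly one period apart
k_lin = linear(np.array([1.0, 2.0]), np.array([3.0, 4.0]))  # plain dot product
```

Comparing `k_near` with `k_far` shows the locality assumption of the RBF kernel, while `k_one_period` shows that the periodic kernel treats inputs a whole period apart as essentially identical.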

Kernels can also be combined (e.g., by addition or multiplication) to create more complex and flexible covariance structures.
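A brief sketch of kernel combination on one-dimensional inputs follows. It assumes the same kernel parameterizations as scikit-learn's `RBF`, `ExpSineSquared`, and `DotProduct`; the helper names are illustrative. Sums and elementwise products of valid kernel matrices remain positive semi-definite, which the example checks numerically through the smallest eigenvalue.

```python
import numpy as np

def rbf_matrix(X, length_scale=1.0):
    """RBF kernel matrix for a 1-D array of inputs."""
    d = X[:, None] - X[None, :]
    return np.exp(-0.5 * d**2 / length_scale**2)

def periodic_matrix(X, length_scale=1.0, period=1.0):
    """Exp-Sine-squared kernel matrix for a 1-D array of inputs."""
    d = np.abs(X[:, None] - X[None, :])
    return np.exp(-2.0 * np.sin(np.pi * d / period) ** 2 / length_scale**2)

def linear_matrix(X):
    """Dot-product kernel matrix (with sigma_0 = 0) for a 1-D array of inputs."""
    return np.outer(X, X)

X = np.linspace(0.0, 4.0, 25)

# Addition: a linear trend plus a periodic component.
K_sum = linear_matrix(X) + periodic_matrix(X)

# Multiplication: a periodic pattern whose shape may drift slowly ("locally periodic").
K_prod = rbf_matrix(X, length_scale=2.0) * periodic_matrix(X)

# Both results should still be valid covariance matrices (symmetric, PSD up to round-off).
min_eig_sum = np.linalg.eigvalsh(K_sum).min()
min_eig_prod = np.linalg.eigvalsh(K_prod).min()
```

Either combined matrix can be used in place of a single-kernel covariance matrix when computing the posterior.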